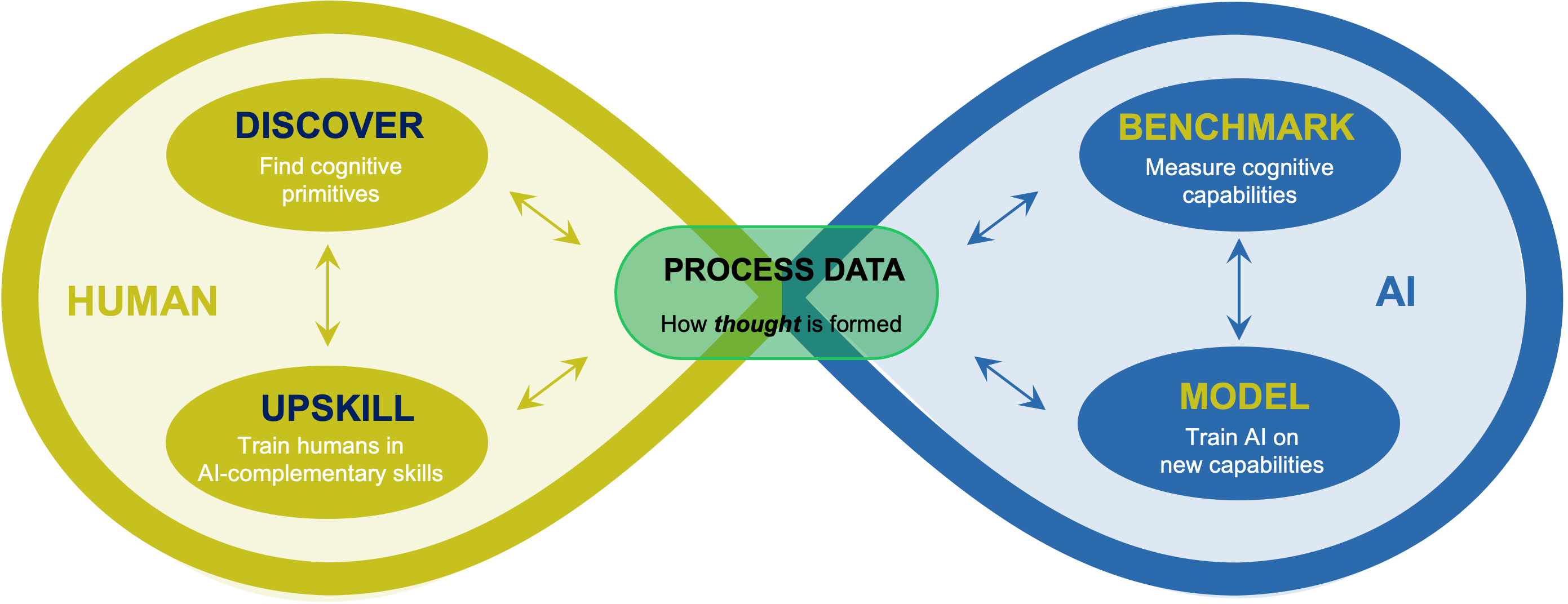

Process Data: The Missing Link

Most AI training data captures only results: the final outputs. What's missing is Process Data, the record of how thinking unfolds, including reasoning traces, decision branches, and uncertainty calibration.

Our human training programs generate this Process Data at scale. No comparable dataset exists anywhere else, and it is key to training AI on the cognitive dimensions that matter.

75% of employees fear AI will eliminate their jobs.

80%+ would embrace AI if those fear barriers were addressed.

The Centaur Principle

After losing to IBM's Deep Blue in 1997, Garry Kasparov observed that a weak human plus a machine plus a better process beats a strong computer alone. We don't replace humans. We create Centaurs: human-AI teams that outperform either component on its own.

People resist tools that replace them but embrace tools that upgrade them. When employees see AI as amplifying their capabilities rather than threatening their livelihoods, adoption accelerates.

The co-evolution model isn't wishful thinking; it's how complex systems naturally evolve. Predators and prey, immune systems and pathogens: in each pair, mutual pressure drives mutual advancement.